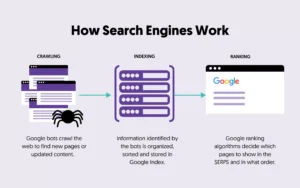

Understanding robots.txt and sitemaps is crucial for website SEO. These tools help search engines crawl and index your site efficiently.

Imagine having a website that nobody can find. That’s where robots.txt and sitemaps come in. These files guide search engines on what to crawl and what to ignore. Robots.txt controls crawler access to parts of your site, while sitemaps list the pages you want indexed.

Together, they ensure your site is search-engine friendly. This improves visibility and ranking. In this blog, we’ll explore how these tools work, why they are important, and how to create them. Let’s dive in and make your website more discoverable!

Introduction To Robots.txt

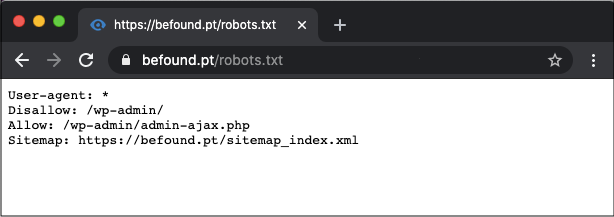

Understanding how search engines navigate your site is crucial. One of the primary tools to guide them is the robots.txt file. This small but powerful text file can help you control which parts of your website are accessible to search engine crawlers.

What Is Robots.txt?

The robots.txt file is a text document in your website’s root directory. It tells search engine crawlers which pages they can or cannot visit. Think of it as a set of instructions for search engines.

| Directive | Description |
|---|---|
| User-agent | Specifies which crawler the rule applies to. |
| Disallow | Defines the pages or directories that should not be crawled. |
| Allow | Specifies the pages or directories that can be crawled. |

Purpose And Importance

The purpose of robots.txt is to manage search engine activity on your site. It can help:

- Reduce the load that crawler requests place on your server.

- Keep crawlers away from private or low-value areas. The file itself is publicly readable, so it is not a security mechanism; protect truly sensitive content with authentication.

- Direct search engines to prioritize specific content.

The importance of robots.txt lies in its ability to support your site’s SEO health. By guiding crawlers properly, you help them spend their time on your most relevant content, which can support your website’s search engine ranking.

User-agent: *

Disallow: /private/

Allow: /public/

In the above code, all user-agents are instructed to avoid the /private/ directory but are allowed to access the /public/ directory.
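To see how a parser reads these rules, here is a minimal sketch using Python's standard-library `urllib.robotparser`. It assumes the group begins with `User-agent: *` so that it addresses every crawler; the crawler name `ExampleBot` and the URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The rules from the example, with "*" addressing all crawlers.
ROBOTS_LINES = [
    "User-agent: *",
    "Disallow: /private/",
    "Allow: /public/",
]


def check_access(urls, user_agent="ExampleBot"):
    """Return a dict mapping each URL to True (crawlable) or False (blocked)."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_LINES)  # parse rules from memory; no network request
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    results = check_access([
        "https://www.example.com/private/report.html",
        "https://www.example.com/public/index.html",
    ])
    for url, allowed in results.items():
        print(url, "->", "allowed" if allowed else "blocked")
```

Running a check like this before publishing a robots.txt file is a quick way to catch rules that block more than intended.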

Proper use of robots.txt can make a big difference. It is a simple yet effective way to manage how search engines interact with your site.

Introduction To Sitemaps

Sitemaps play a crucial role in search engine optimization (SEO). They help search engines understand your website’s structure. This improves your chances of being indexed properly. Let’s explore what sitemaps are and their different types.

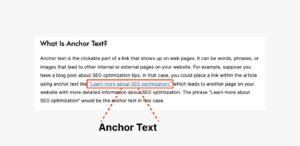

What Is A Sitemap?

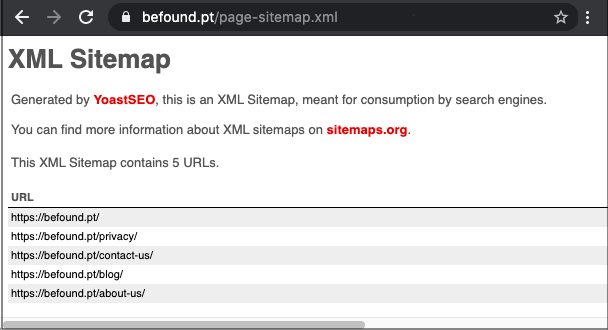

A sitemap is a file that lists the pages of your website. This file informs search engines about the organization of your site. Think of it as a roadmap for search engines.

There are two main formats of sitemaps:

- XML sitemaps – Designed for search engines

- HTML sitemaps – Designed for users

Both formats help improve the user experience and site indexing.

Types Of Sitemaps

There are different types of sitemaps based on their purpose:

| Type | Description |
|---|---|
| XML Sitemap | Lists URLs for a site, helping search engines crawl efficiently. |
| HTML Sitemap | Aids users in navigating a website. |
| Video Sitemap | Specifies information about video content on your site. |
| Image Sitemap | Provides data about images on a website. |

Each type of sitemap serves a unique purpose. They all contribute to the overall health of your website. Understanding and using these sitemaps can enhance your SEO efforts.
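As an illustration of a specialized type, the sketch below builds a small image sitemap with Python's standard library. It assumes Google's image sitemap extension namespace; the function name `build_image_sitemap` and all URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"

# Serialize with a default namespace and an "image:" prefix.
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)


def build_image_sitemap(page_url, image_urls):
    """Return sitemap XML listing one page and the images it contains."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
    ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
    for image_url in image_urls:
        image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
        ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_image_sitemap(
        "https://www.example.com/gallery/",
        ["https://www.example.com/img/a.jpg", "https://www.example.com/img/b.jpg"],
    ))
```

Each image is attached to the page that displays it, so a single `url` entry can carry several image entries.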

Creating An Effective Robots.txt

Creating an effective robots.txt file is crucial for guiding search engines. It tells them which parts of your site to crawl and which to skip. A well-written file can support your website’s SEO and keep crawlers out of areas you do not want crawled. Knowing its basic syntax and structure ensures proper implementation.

Basic Syntax And Structure

The robots.txt file resides in your website’s root directory. It uses a simple syntax to communicate with web crawlers. Rules are organized in groups. Each group starts with a User-agent line, which specifies the crawler the group applies to. The lines that follow contain directives, such as Disallow or Allow, that tell the crawler what to do.

A basic example looks like this:

User-agent: *

Disallow: /private/

In this example, all crawlers are disallowed from accessing the /private/ directory. The asterisk (*) means the rule applies to all crawlers.
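The group structure described above can be sketched as a small generator. This is only an illustration: the function name `render_robots_txt` and the crawler name `ExampleBot` are hypothetical, and the sketch covers plain groups of directives only.

```python
def render_robots_txt(groups):
    """Build robots.txt text from a list of (user_agent, directives) groups.

    Each directives value is a list of (name, path) pairs,
    for example ("Disallow", "/private/").
    """
    blocks = []
    for user_agent, directives in groups:
        lines = [f"User-agent: {user_agent}"]  # every group starts here
        lines += [f"{name}: {path}" for name, path in directives]
        blocks.append("\n".join(lines))
    # A blank line separates one group from the next.
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    print(render_robots_txt([
        ("*", [("Disallow", "/private/")]),
        ("ExampleBot", [("Disallow", "/")]),
    ]))
```

Generating the file from data like this keeps the syntax consistent when rules change often.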

Common Directives

Several common directives help you control crawler behavior. The Disallow directive blocks access to specified paths. For example:

Disallow: /admin/

This blocks crawlers from accessing the /admin/ directory. The Allow directive permits access to specific paths. It is useful within disallowed directories. For example:

Allow: /admin/public/

This allows access to the /admin/public/ directory, even if /admin/ is disallowed. The Sitemap directive informs crawlers about the location of your sitemap. It looks like this:

Sitemap: http://www.example.com/sitemap.xml

Including a sitemap directive ensures crawlers find your sitemap easily. It helps them index your site more efficiently.
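When Allow and Disallow rules overlap, crawlers that follow the Robots Exclusion Protocol standard (RFC 9309) use the most specific matching path, meaning the longest one, and prefer Allow on a tie. The sketch below illustrates that idea under simplifying assumptions: it handles plain path prefixes only (no `*` or `$` wildcards), and the function name `is_allowed` is hypothetical.

```python
def is_allowed(path, rules):
    """Decide whether `path` may be crawled under simple prefix rules.

    `rules` is a list of (directive, prefix) pairs, e.g. ("disallow", "/admin/").
    Wildcards (* and $) are not handled in this simplified sketch.
    """
    best_directive, best_length = "allow", -1  # no matching rule means allowed
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # skip empty or non-matching rules
        longer = len(prefix) > best_length
        tie_prefers_allow = len(prefix) == best_length and directive == "allow"
        if longer or tie_prefers_allow:
            best_directive, best_length = directive, len(prefix)
    return best_directive == "allow"


# The rules from the examples above.
RULES = [("disallow", "/admin/"), ("allow", "/admin/public/")]

if __name__ == "__main__":
    for path in ("/admin/settings", "/admin/public/help.html", "/blog/post"):
        print(path, "->", "allowed" if is_allowed(path, RULES) else "blocked")
```

Not every parser resolves conflicts this way. Python's own `urllib.robotparser`, for instance, applies the first matching rule in file order, so it is worth testing with the tools of the search engines you care about.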

Building Comprehensive Sitemaps

A comprehensive sitemap is essential for a successful website. It helps search engines find and index your web pages. This improves your site’s visibility and ranking. Let’s explore the key elements and best practices for building comprehensive sitemaps.

Essential Elements

To create an effective sitemap, include the following essential elements:

- URLs: List all the URLs you want search engines to index.

- Last Modified Date: Include the last update date for each URL. This helps search engines know when content has changed.

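- Priority: Optionally assign a priority level (0.0 to 1.0) to each URL to signal which pages you consider most important. Some search engines, including Google, ignore this value.

- Change Frequency: Optionally indicate how often each page is likely to change. Treat this as a hint; some search engines, including Google, ignore it and rely on the last modified date instead.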

Here is an example of a basic XML sitemap:

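<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>http://www.example.com/</loc>
    <lastmod>2023-10-01</lastmod>
    <changefreq>monthly</changefreq>
    <priority>1.0</priority>
  </url>
  <url>
    <loc>http://www.example.com/about/</loc>
    <lastmod>2023-09-15</lastmod>
    <changefreq>yearly</changefreq>
    <priority>0.8</priority>
  </url>
</urlset>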
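A sitemap with the elements listed above could also be generated by a script rather than written by hand. The following is a minimal sketch using Python's standard library; the function name `build_sitemap` and the dictionary layout of each entry are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML for a list of dicts.

    Each dict needs a "loc" key and may include "lastmod",
    "changefreq", and "priority".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        for tag in ("loc", "lastmod", "changefreq", "priority"):
            if tag in entry:  # optional fields are skipped when absent
                ET.SubElement(url, tag).text = str(entry[tag])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        {"loc": "http://www.example.com/", "lastmod": "2023-10-01",
         "changefreq": "monthly", "priority": "1.0"},
        {"loc": "http://www.example.com/about/", "lastmod": "2023-09-15",
         "changefreq": "yearly", "priority": "0.8"},
    ]))
```

Building the XML through a library rather than string concatenation means special characters in URLs, such as `&`, are escaped automatically.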

Best Practices

Follow these best practices to ensure your sitemap is effective:

- Keep It Updated: Regularly update your sitemap. Add new pages and remove outdated ones.

- Limit URLs: Include no more than 50,000 URLs per sitemap, and keep each file under 50 MB uncompressed. If you have more, use multiple sitemaps.

- Use Sitemap Index: If you have multiple sitemaps, create a sitemap index file. This file lists all your sitemaps.

- Submit to Search Engines: Submit your sitemap to search engines. Use Google Search Console and Bing Webmaster Tools.

- Validate Your Sitemap: Use online tools to check for errors in your sitemap. Ensure it meets XML standards.

By following these practices, your sitemap will be a valuable tool. It will help search engines understand and index your site more effectively.
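The URL limit and the sitemap index practice can be sketched together. This example assumes the 50,000-URL limit mentioned above; the function names and the sitemap file URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50000


def split_into_sitemaps(urls, limit=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into chunks that each fit in one sitemap file."""
    return [urls[i:i + limit] for i in range(0, len(urls), limit)]


def build_sitemap_index(sitemap_urls):
    """Return sitemap index XML that points to each sitemap file."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for sitemap_url in sitemap_urls:
        sitemap = ET.SubElement(index, "sitemap")
        ET.SubElement(sitemap, "loc").text = sitemap_url
    body = ET.tostring(index, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    pages = [f"https://www.example.com/page-{n}" for n in range(120001)]
    chunks = split_into_sitemaps(pages)
    files = [f"https://www.example.com/sitemap-{n}.xml"
             for n in range(1, len(chunks) + 1)]
    print(len(chunks), "sitemap files needed")
    print(build_sitemap_index(files))
```

You would then submit only the index file to search engines, and they follow it to the individual sitemaps.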

Integrating Robots.txt With Sitemaps

Integrating robots.txt with sitemaps is essential for effective website indexing. This integration guides search engines on which pages to crawl. It also helps in optimizing your site’s visibility in search results.

Ensuring Compatibility

Ensure the robots.txt file and sitemap are compatible. The robots.txt file should reference the sitemap. This tells search engines where to find the sitemap. Use the following code to include the sitemap in the robots.txt file:

User-agent: *

Disallow: /private/

Sitemap: https://www.example.com/sitemap.xml

Always test the robots.txt file for errors. A testing tool such as the robots.txt report in Google Search Console helps you check whether the file blocks important pages. Also confirm that the sitemap URL is correct.
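Alongside a search engine's own tools, a basic local check is possible with Python's standard library. The sketch below reads the Sitemap lines from a robots.txt file, flags any that are not absolute http(s) URLs, and reports which of your important pages are blocked. The function name `audit_robots_txt` and the sample URLs are hypothetical, and `site_maps()` requires Python 3.8 or later.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


def audit_robots_txt(robots_text, important_urls, user_agent="*"):
    """Report declared sitemaps, malformed sitemap URLs, and blocked pages."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())

    sitemaps = parser.site_maps() or []  # site_maps() returns None if absent
    invalid_sitemaps = [
        url for url in sitemaps
        if urlparse(url).scheme not in ("http", "https") or not urlparse(url).netloc
    ]
    blocked = [url for url in important_urls
               if not parser.can_fetch(user_agent, url)]
    return {"sitemaps": sitemaps,
            "invalid_sitemaps": invalid_sitemaps,
            "blocked": blocked}


if __name__ == "__main__":
    robots_text = (
        "User-agent: *\n"
        "Disallow: /private/\n"
        "\n"
        "Sitemap: https://www.example.com/sitemap.xml\n"
    )
    print(audit_robots_txt(robots_text, [
        "https://www.example.com/",
        "https://www.example.com/private/pricing.html",
    ]))
```

An empty `sitemaps` list or a non-empty `blocked` list is a signal to review the file before deploying it.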

Avoiding Common Mistakes

Mistakes can affect site indexing. Avoid these common errors:

- Not updating the sitemap URL in the robots.txt file

- Blocking essential pages with the robots.txt file

- Incorrectly formatting the robots.txt file

- Forgetting to test the file for errors

Keep the robots.txt file simple. Complex rules can confuse search engines. Regularly review and update the file.

| Mistake | Solution |
|---|---|
| Outdated Sitemap URL | Update robots.txt with the correct sitemap URL |
| Blocking Important Pages | Review and remove unnecessary disallow rules |
| Incorrect Formatting | Follow standard robots.txt syntax rules |
| Not Testing | Use tools to test and verify the file |
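One way to catch the "blocking important pages" mistake is to cross-check the two files: any URL listed in the sitemap but disallowed in robots.txt sends search engines a contradictory signal. The following is a minimal sketch using Python's standard library; the function name `find_blocked_sitemap_urls` and the sample content are hypothetical, and Python's parser may resolve overlapping Allow and Disallow rules differently from a given search engine.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def find_blocked_sitemap_urls(robots_text, sitemap_xml, user_agent="*"):
    """Return sitemap URLs that the robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())

    root = ET.fromstring(sitemap_xml.encode("utf-8"))
    locs = [loc.text.strip()
            for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
            if loc.text]
    return [url for url in locs if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    robots_text = "User-agent: *\nDisallow: /private/\n"
    sitemap_xml = (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://www.example.com/</loc></url>"
        "<url><loc>https://www.example.com/private/page</loc></url>"
        "</urlset>"
    )
    print(find_blocked_sitemap_urls(robots_text, sitemap_xml))
```

Each URL the function returns should either be removed from the sitemap or unblocked in robots.txt.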

SEO Benefits Of Robots.txt

The robots.txt file plays a crucial role in SEO strategies. It offers several benefits that can enhance your website’s performance and visibility. Understanding these benefits can help you optimize your site effectively.

Controlling Web Crawlers

One major benefit of robots.txt is the control it offers over web crawlers. You can specify which parts of your site should not be crawled. This keeps crawlers away from irrelevant or low-value pages and lets search engines focus on more important ones. Keep in mind that blocking crawling does not guarantee a page stays out of the index: a disallowed URL can still be indexed if other sites link to it. To keep a page out of search results, use a noindex directive or require authentication instead.

Enhancing Site Performance

Using robots.txt can also help your site’s performance. By blocking unnecessary pages from being crawled, you reduce the load that crawlers place on your server. On sites with heavy crawler traffic, this can free up resources for real visitors. Faster sites tend to offer a better user experience and can see lower bounce rates, both of which support your SEO efforts.

SEO Benefits Of Sitemaps

Sitemaps are essential tools for SEO. They help search engines understand your website structure. This improves the chances of better indexing. A well-structured sitemap can significantly boost your site’s visibility. Here we will explore the SEO benefits of sitemaps.

Improving Crawl Efficiency

Search engines use crawlers to discover and index web pages. A sitemap makes this process faster. It lists your site’s pages in a structured manner, which helps crawlers find your content efficiently. It also reduces the chance that pages are missed during crawling, although listing a page does not guarantee it will be indexed.

Crawl efficiency can be improved by:

- Including important pages in the sitemap

- Keeping the sitemap updated with new content

- Removing outdated or irrelevant pages

With a clear sitemap, search engines can prioritize crawling important pages. This reduces the risk of missing out on key content. It also minimizes the time spent on irrelevant pages.

Boosting Indexation

A sitemap helps boost indexation by providing a clear roadmap of your site. It ensures that all pages, including deeper links, are discovered. This is especially important for new or large websites.

Key benefits of boosted indexation include:

- Enhanced visibility of new content

- A better chance for more of your pages to appear in search results

- Faster updates in search engine results

Sitemaps also help with indexing multimedia content. This includes images, videos, and other non-text content. By including these in your sitemap, you make them easier for search engines to discover. This can improve the overall visibility of your site.

In summary, sitemaps are crucial for SEO. They improve crawl efficiency and boost indexation. This can lead to better visibility and higher rankings in search engines.

Tools For Managing Robots.txt And Sitemaps

Managing robots.txt and sitemaps is crucial for website optimization. Proper management ensures search engines crawl and index your site correctly. Using the right tools can simplify this process and enhance your site’s visibility.

Popular Tools

Various tools help manage robots.txt and sitemaps efficiently. Below are some of the most popular ones:

- Google Search Console: Allows you to test and submit robots.txt files and sitemaps.

- Yoast SEO: A WordPress plugin that helps generate and manage robots.txt and sitemaps.

- Screaming Frog SEO Spider: Analyzes your site, helping you detect issues in robots.txt and sitemaps.

- XML Sitemaps: Generates sitemaps for your website, helping search engines find all of your pages.

Tips For Effective Management

Managing robots.txt and sitemaps effectively requires some best practices. Follow these tips for better results:

- Regular Updates: Keep your robots.txt and sitemaps updated with new content.

- Test Before Uploading: Always test your robots.txt file using Google Search Console.

- Use Simple Syntax: Ensure your robots.txt file uses simple and correct syntax.

- Include All Pages: Make sure your sitemap includes all important pages of your site.

- Avoid Blocking Essential Pages: Do not block pages you want search engines to index.

Using these tools and tips can improve your site’s performance and visibility. Proper management of robots.txt and sitemaps is key to successful SEO.

Case Studies And Success Stories

Case studies and success stories provide valuable insights into how businesses have effectively used robots.txt and sitemaps to improve their website’s SEO performance. By analyzing real-world examples, we can uncover practical lessons and strategies that can be applied to your own website.

Real-world Examples

Let’s explore some real-world examples of companies that have successfully utilized robots.txt and sitemaps:

| Company | Strategy | Outcome |
|---|---|---|
| Company A | Optimized their robots.txt to block duplicate content | Improved crawl efficiency and ranking |
| Company B | Implemented a sitemap to index new content quickly | Increased organic traffic by 30% |
| Company C | Combined a dynamic sitemap with effective robots.txt rules | Enhanced site visibility and user experience |

Lessons Learned

From these examples, we can draw several key lessons:

- Optimize robots.txt: Blocking unnecessary pages can improve crawl efficiency.

- Use sitemaps: Ensure all important pages are indexed quickly.

- Combine strategies: Use both robots.txt and sitemaps for better results.

- Regular updates: Keep your sitemaps and robots.txt files up-to-date with site changes.

These strategies demonstrate how effective robots.txt and sitemaps can be in enhancing SEO performance. By learning from these real-world examples, you can apply similar tactics to improve your own website’s visibility and traffic.

Conclusion And Next Steps

Understanding robots.txt and sitemaps is crucial for SEO. These tools help search engines crawl and index your site effectively. This section recaps the key points and suggests future strategies.

Recap Of Key Points

- Robots.txt files guide search engines on which pages to crawl.

- Sitemaps provide a roadmap for search engines to follow.

- Both are essential for SEO and site indexing.

- Ensure your robots.txt file does not block important pages.

- Regularly update your sitemap as your site evolves.

Future Strategies

- Review your robots.txt file monthly.

- Check for any accidental disallows.

- Generate a new sitemap after significant updates.

- Submit your sitemap to search engines.

- Monitor your site’s indexing status via Google Search Console.

Implement these strategies to keep your site optimized. Regular maintenance of robots.txt and sitemaps is key for long-term success.

Frequently Asked Questions

What Is A Robots.txt File?

A robots.txt file is a plain text file used to control web crawlers. It tells search engines which pages they may crawl and which they should skip.

Why Is A Sitemap Important?

A sitemap is important because it helps search engines understand your website structure. It makes it more likely that all of your important pages are discovered and indexed.

How Do I Create A Robots.txt File?

To create a robots.txt file, use a plain text editor. Specify rules for web crawlers and upload it to your website’s root directory.

Can I Have Multiple Sitemaps?

Yes, you can have multiple sitemaps. This is especially useful for large websites. Use a sitemap index file to list all sitemaps.

Conclusion

Understanding robots.txt and sitemaps boosts your website’s search engine visibility. These tools guide search engines to your site’s important content. Implementing them properly enhances your site’s SEO performance. Keep your robots.txt file updated and your sitemap accurate. This helps search engines index your site correctly.

Regularly check for errors and fix them promptly. This keeps your site friendly for both users and search engines. Remember, clear and organized navigation improves user experience. Investing time in these areas pays off in better search rankings and more visitors.

Ms. Sultana brings over 16 years of experience working with global clients across a range of skills and services. For the last 5 years she has worked as an affiliate marketer, specializing in high-ticket campaigns that drive exponential growth. She holds a degree in Computer Science and Engineering and has earned skills certificates from various institutes, academies, and platforms. As part of the Elite Global Marketing team, Sultana has helped clients generate millions in revenue through strategic partnerships, innovative funnels, and data-driven insights.